Concept Learning in Machine Learning: A Beginner's Guide (2025)

Concept Learning: An Introduction

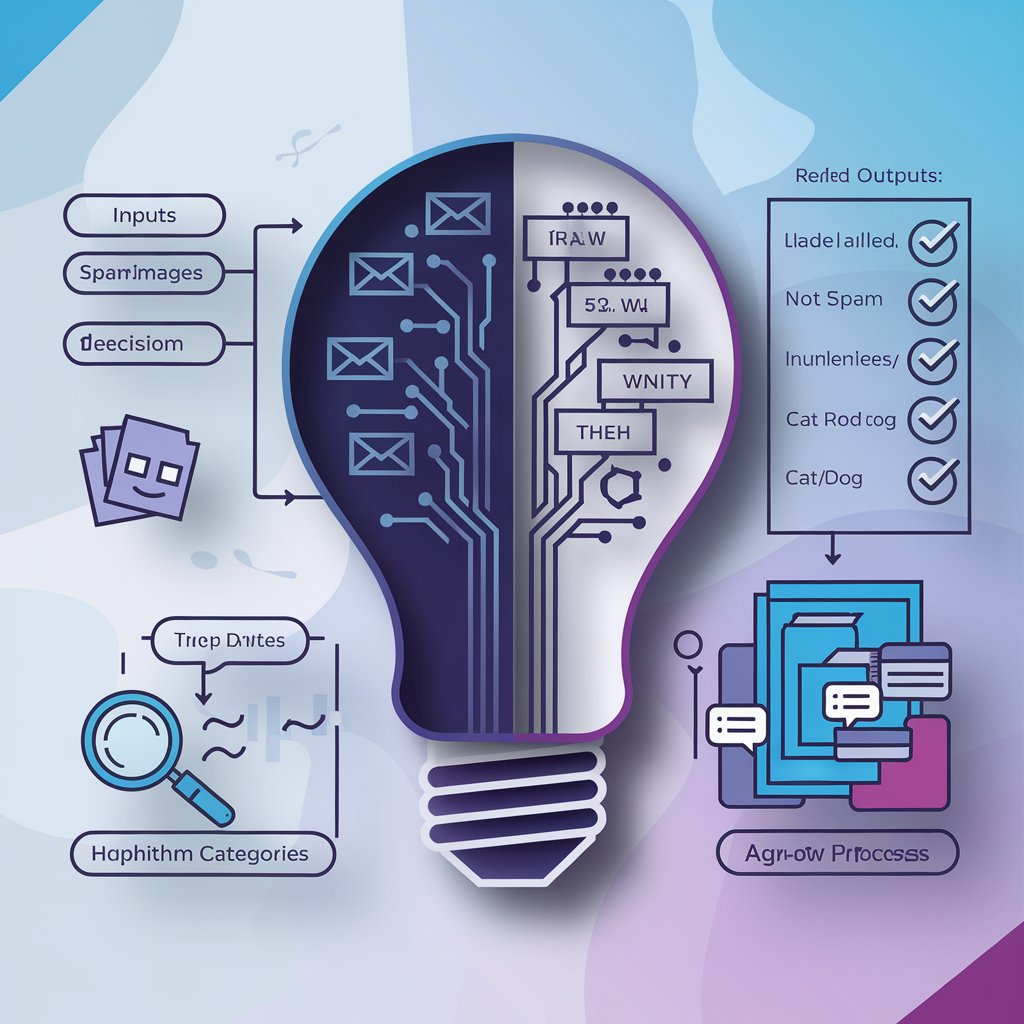

Concept learning is one of the cornerstones of machine learning (ML). It is the process by which an artificial intelligence (AI) system learns to classify inputs or predict outcomes from the data it is given. For instance, a spam filter "learns" the concept of spam by examining patterns in wording and sender behavior.

This article covers:

- What concept learning is
- How it works in machine learning
- The main algorithms used
- Practical applications

What Is Concept Learning?

Concept learning, a form of inductive learning, is the process by which a machine learning model infers a general concept from specific examples.

Main Features:

- Input: Labeled training data (e.g., emails flagged "spam" or "not spam").
- Output: A hypothesis that predicts labels for new, unseen data.
- Goal: Derive a general principle from specific cases.

A model trained on animal photos, for instance, learns the concept of "cat" by spotting shared traits such as pointy ears, whiskers, and fur texture.

The Mechanics of Concept Learning

Concept learning follows a methodical process:

1. Gathering training data

Collect labeled examples, such as "spam" vs. "legitimate" emails.

Every example has a label (spam/not spam) and features (e.g., email subject, sender).

2. Generating hypotheses

The model proposes candidate rules for categorizing the data.

For instance, "An email is spam if it has 'free offer.'"

3. Testing hypotheses

The model evaluates each hypothesis against the training data.

Incorrect hypotheses are discarded or refined.

4. Generalizing

The best-performing hypothesis is chosen to make predictions on new, unseen data.
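The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not how a production spam filter works: the emails, the candidate phrases, and the helper names `predict` and `accuracy` are all made up for the example, and each hypothesis is assumed to have the simple form "an email is spam if it contains this phrase."

```python
# Step 1: toy labeled training data (made up for illustration).
training_emails = [
    ("Claim your free offer today", "spam"),
    ("Free offer inside, act now", "spam"),
    ("Meeting moved to 3pm", "not spam"),
    ("Lunch tomorrow?", "not spam"),
    ("Your invoice is attached", "not spam"),
    ("Win a prize with this free offer", "spam"),
]

# Step 2: candidate hypotheses, each meaning
# "an email is spam if it contains this phrase".
candidate_phrases = ["free offer", "meeting", "prize", "invoice"]


def predict(phrase, text):
    """Apply the rule 'spam if the phrase appears in the text'."""
    return "spam" if phrase in text.lower() else "not spam"


def accuracy(phrase, examples):
    """Step 3: fraction of labeled examples the rule classifies correctly."""
    correct = sum(predict(phrase, text) == label for text, label in examples)
    return correct / len(examples)


# Score every hypothesis against the training data.
scores = {phrase: accuracy(phrase, training_emails) for phrase in candidate_phrases}

# Step 4: keep the best-scoring rule and apply it to an unseen email.
best_phrase = max(scores, key=scores.get)
print(best_phrase, scores[best_phrase])  # free offer 1.0
print(predict(best_phrase, "Exclusive FREE OFFER just for you"))  # spam
```

On real data no single phrase would score perfectly, which is why practical systems combine many features instead of relying on one rule.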

Important Algorithms for Concept Learning

Several ML algorithms are built around concept learning:

| Algorithm | How It Works | Example Use |
|---|---|---|
| Decision Tree | Splits data into branches based on rules | Customer churn prediction |
| Naive Bayes | Classifies using probability | Spam detection |
| k-Nearest Neighbors (kNN) | Classifies based on similar cases | Medical diagnosis |
| Support Vector Machines (SVM) | Finds the optimal decision boundary | Image recognition |
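As a rough sketch of how the four algorithms above can be tried side by side, the snippet below fits each one with scikit-learn on a tiny made-up dataset. The data, the choice of `GaussianNB` as the Naive Bayes variant, `n_neighbors=3`, and the linear SVM kernel are all assumptions picked to keep the example small; they are not recommendations for real problems.

```python
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Made-up toy data: two well-separated clusters of [feature1, feature2] points.
X = [[1, 1], [1, 2], [2, 1], [2, 2], [8, 8], [8, 9], [9, 8], [9, 9]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

# One model per algorithm family; hyperparameters are illustrative assumptions.
models = {
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Naive Bayes": GaussianNB(),
    "k-Nearest Neighbors": KNeighborsClassifier(n_neighbors=3),
    "SVM": SVC(kernel="linear"),
}

# Fit each model on the same data and classify one unseen point.
new_point = [[8.5, 8.5]]
predictions = {}
for name, model in models.items():
    model.fit(X, y)
    predictions[name] = int(model.predict(new_point)[0])
    print(f"{name}: {predictions[name]}")
```

Because the two clusters are far apart, every model should place the new point in class 1. The algorithms only start to disagree on harder data, where their different assumptions matter.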

Practical Applications

Concept learning powers many of the AI tools we use every day:

Spam Filtering

Learns the concept of "spam" from labeled emails.

Detects suspicious links and patterns in promotional language.

Medical Diagnosis

Trains on patient data to predict diseases (e.g., diabetes risk).

Example rule: if blood sugar exceeds X and BMI exceeds Y, predict diabetes.
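A rule of this kind is just a small function of the patient's features. The sketch below keeps the two thresholds as parameters, since the text leaves X and Y unspecified. The function name `predict_diabetes` and the numbers in the usage lines are placeholders for illustration only and are not clinical values.

```python
def predict_diabetes(blood_sugar, bmi, sugar_threshold, bmi_threshold):
    """Rule-style hypothesis: flag risk only when both values exceed their thresholds."""
    return blood_sugar > sugar_threshold and bmi > bmi_threshold


# Placeholder thresholds standing in for X and Y; NOT clinical values.
SUGAR_THRESHOLD = 100
BMI_THRESHOLD = 25

print(predict_diabetes(120, 30, SUGAR_THRESHOLD, BMI_THRESHOLD))  # True
print(predict_diabetes(120, 22, SUGAR_THRESHOLD, BMI_THRESHOLD))  # False
```

In a learned model, the thresholds would be chosen from labeled patient data, not set by hand.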

Fraud Detection

Banks use concept learning to flag suspicious transactions.

Learns from past fraud cases to identify new ones.

Image Recognition

Classifies objects (e.g., cats vs. dogs) based on pixel patterns.

Challenges in Concept Learning

Despite its usefulness, concept learning has drawbacks:

| Challenge | Solution |
|---|---|
| Overfitting | Apply regularization and cross-validation |
| Missing or noisy data | Clean the data and impute missing values |
| Bias | Use balanced datasets and fairness testing |
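Two of these remedies can be combined in a short scikit-learn pipeline. The sketch below is one possible setup, not the only one: the toy data is made up, missing values are filled with the column mean via `SimpleImputer`, the tree depth is capped at 2 as a simple form of regularization, and `cross_val_score` estimates accuracy on held-out folds. The specific settings (`max_depth=2`, `cv=3`) are assumptions chosen for a small example.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.tree import DecisionTreeClassifier

# Made-up toy data: two numeric features, with np.nan marking missing values.
X = np.array([
    [1.0, 2.0], [1.5, np.nan], [2.0, 1.0], [np.nan, 1.5], [1.2, 1.8], [1.8, 1.1],
    [8.0, 9.0], [8.5, np.nan], [9.0, 8.0], [np.nan, 8.5], [8.2, 8.8], [8.8, 8.1],
])
y = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1])

# Impute missing values, then fit a depth-limited (regularized) decision tree.
model = make_pipeline(
    SimpleImputer(strategy="mean"),
    DecisionTreeClassifier(max_depth=2, random_state=0),
)

# 3-fold cross-validation: each fold is held out once for evaluation.
scores = cross_val_score(model, X, y, cv=3)
print(scores)
print(scores.mean())
```

Putting the imputer inside the pipeline means the fill values are computed from each training fold only, which avoids leaking information from the held-out fold.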

Concept Learning Compared to Other Machine Learning Techniques

How does concept learning compare with other approaches?

| Feature | Concept Learning | Deep Learning |
|---|---|---|
| Data required | Small to moderate datasets | Large datasets |
| Interpretability | High (clear rules) | Low ("black box") |
| Best for | Structured problems | Complex patterns (e.g., NLP) |
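The interpretability difference is easy to see with a decision tree, whose learned rules can be printed directly. The sketch below uses scikit-learn's `export_text` on a small made-up dataset. The feature names `feature1` and `feature2` are placeholders.

```python
from sklearn.tree import DecisionTreeClassifier, export_text

# Made-up toy data: the label happens to equal the first feature.
X = [[0, 0], [1, 1], [0, 1], [1, 0]]
y = [0, 1, 0, 1]

clf = DecisionTreeClassifier(random_state=0)
clf.fit(X, y)

# Render the fitted tree as human-readable if/else rules.
rules = export_text(clf, feature_names=["feature1", "feature2"])
print(rules)
```

The printed rules show a single split on `feature1`, which a person can read and check. A deep neural network trained on the same task offers no comparable summary of its reasoning.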

DIY: Applying Concept Learning in Python

Here is a simple example using scikit-learn:

    from sklearn.tree import DecisionTreeClassifier

    # Training data: each row of X is [feature1, feature2]; y holds the labels
    X = [[0, 0], [1, 1], [0, 1], [1, 0]]
    y = [0, 1, 0, 1]  # 0 = "Class A", 1 = "Class B"

    # Train model
    clf = DecisionTreeClassifier()
    clf.fit(X, y)

    # Predict
    print(clf.predict([[1, 1]]))  # Output: [1] (Class B)

Final Thoughts

Many ML systems are built on concept learning, which enables AI to make predictions by learning from examples. Challenges such as bias and overfitting remain, but sound training practices and algorithms like decision trees and SVMs make it robust enough for practical use.